CPAM: Context-Preserving Adaptive Manipulation for Zero-Shot Real Image Editing

Abstract

Editing natural images using textual descriptions in text-to-image diffusion models remains a significant challenge, particularly in achieving consistent generation and handling complex, non-rigid objects. Existing methods often struggle to preserve textures and identity, require extensive fine-tuning, and are limited in editing specific spatial regions or objects while retaining background details. This paper proposes Context-Preserving Adaptive Manipulation (CPAM), a novel zero-shot framework for complex, non-rigid real image editing. Specifically, we propose a preservation adaptation module that adjusts self-attention mechanisms to preserve and independently control the object and the background. Using mask guidance, this module keeps the objects' shapes, textures, and identities intact while leaving the background undistorted during editing. Additionally, we develop a localized extraction module that mitigates interference in regions that should not be modified during cross-attention conditioning. We also introduce various mask-guidance strategies that support diverse image manipulation tasks in a simple manner. Extensive experiments on our newly constructed Image Manipulation BenchmArk (IMBA), a robust benchmark dataset specifically designed for real image editing, demonstrate that our proposed method is the preferred choice among human raters, outperforming existing state-of-the-art editing techniques.

Approach

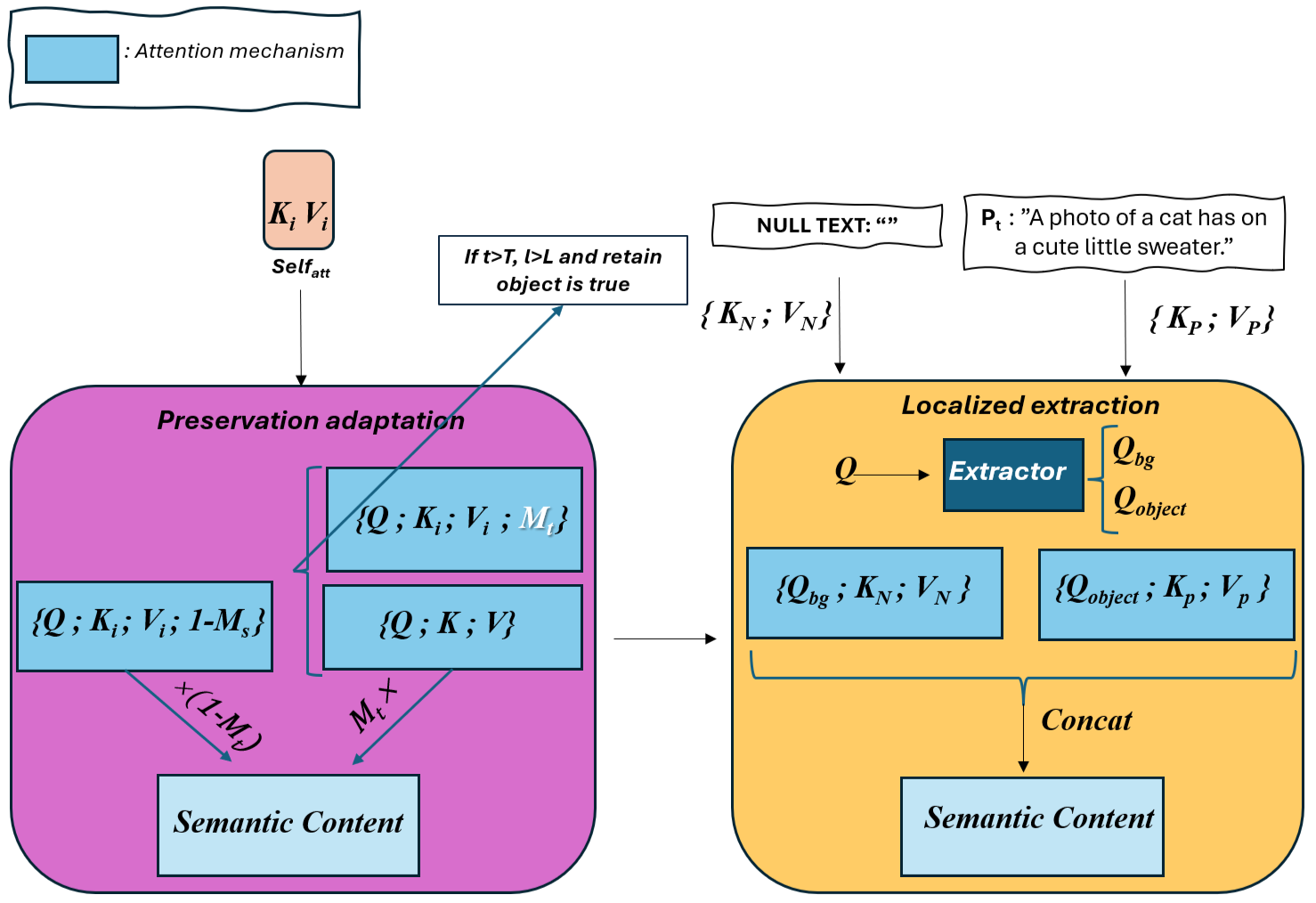

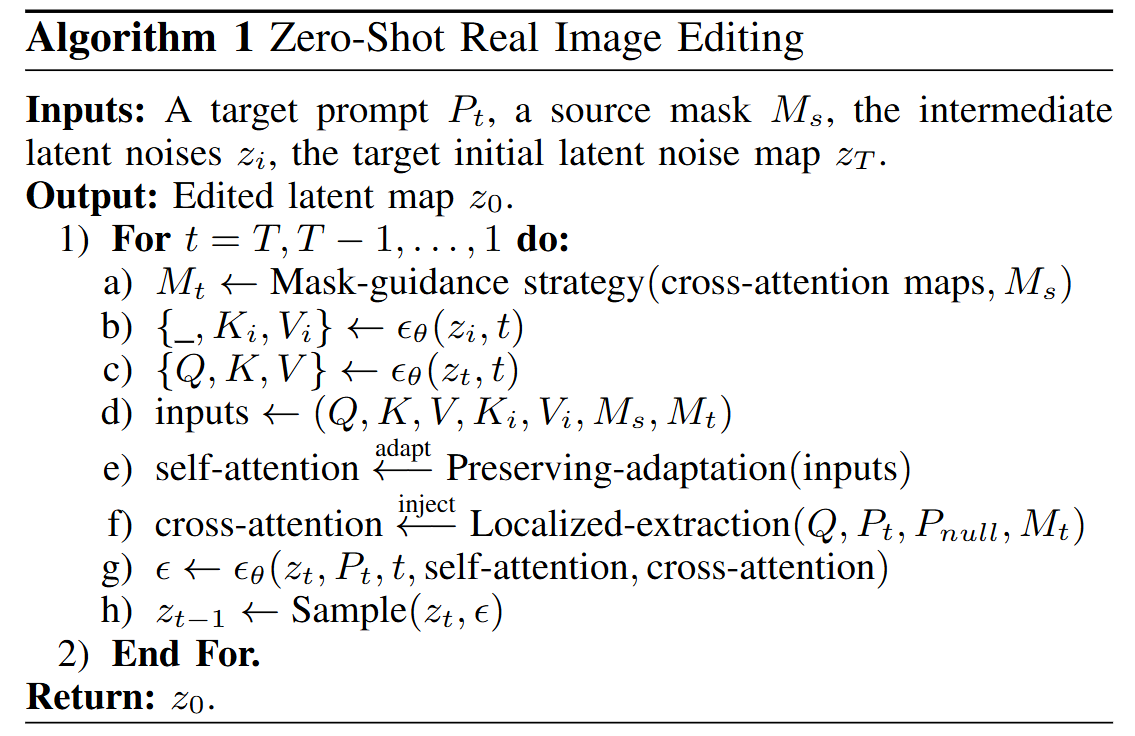

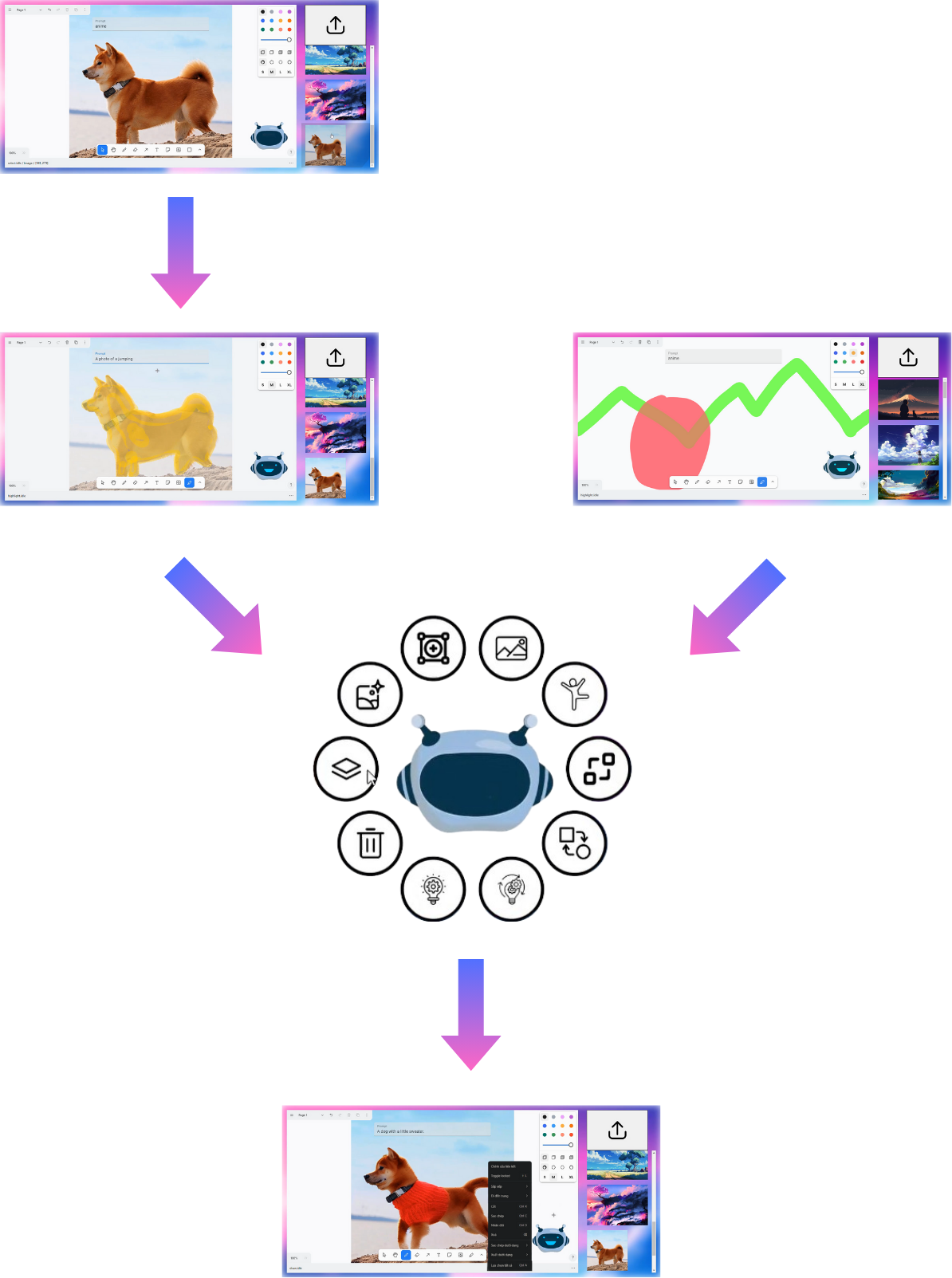

We propose Context-Preserving Adaptive Manipulation (CPAM) to edit a source image I_s, given a source object mask M_s and a target text prompt P_t, into a new image I_t that aligns with P_t. The mask is provided through the MaskInputModule, which can derive it in several ways: manual drawing, click-based extraction, or text prompts using SAM. Notably, I_t may differ spatially from I_s: CPAM can modify objects or the background while keeping the other regions unchanged. To achieve this, we introduce a preservation adaptation module that adjusts self-attention to align the semantic content of the intermediate latent noise with the currently edited noise, ensuring that the original object and background are retained during editing. To prevent the target prompt from causing unwanted changes in regions that should not be modified, we propose a localized extraction module that enables targeted editing while preserving the remaining details. Additionally, we propose mask-guidance strategies for diverse image manipulation tasks. Below are the overall CPAM architecture, its detailed mechanism, and the zero-shot editing algorithm.
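To make the idea of mask-controlled self-attention more concrete, here is a minimal NumPy sketch of mask-guided mutual self-attention in the spirit of MasaCtrl, one of the baselines compared below. Queries come from the edited (target) branch, keys and values come from the source branch, and the source mask restricts which source tokens the object and background may draw from; the two results are then composited with a target mask. This is an illustrative assumption, not CPAM's published formulation: the function names, token-level boolean masks, and the `-1e9` masking constant are all hypothetical.

```python
import numpy as np

def masked_attention(q, k, v, key_mask):
    """Scaled dot-product attention in which queries may only attend to keys in key_mask.

    q: (n, d) queries; k, v: (m, d) keys/values; key_mask: (m,) bool, True = allowed.
    """
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = np.where(key_mask[None, :], scores, -1e9)     # block disallowed keys
    scores = scores - scores.max(axis=-1, keepdims=True)   # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v

def mask_guided_self_attention(q_tgt, k_src, v_src, src_mask, tgt_mask):
    """Hypothetical object/background-preserving self-attention.

    q_tgt: queries from the edited (target) denoising branch.
    k_src, v_src: keys/values from the source (reconstruction) branch.
    src_mask / tgt_mask: (tokens,) bool object masks in the source / target layouts.
    """
    obj = masked_attention(q_tgt, k_src, v_src, src_mask)   # draw only from the source object
    bg = masked_attention(q_tgt, k_src, v_src, ~src_mask)   # draw only from the source background
    m = tgt_mask[:, None].astype(obj.dtype)
    return m * obj + (1.0 - m) * bg                         # composite with the target mask

# Toy check: source values are 1 on the object and 0 on the background,
# so the output should be 1 exactly where the target mask marks the object.
rng = np.random.default_rng(0)
n, d = 16, 8
q, k = rng.standard_normal((n, d)), rng.standard_normal((n, d))
src_mask = np.zeros(n, dtype=bool); src_mask[:4] = True
tgt_mask = np.zeros(n, dtype=bool); tgt_mask[2:6] = True
v = np.repeat(src_mask[:, None].astype(float), d, axis=1)
out = mask_guided_self_attention(q, k, v, src_mask, tgt_mask)
print(np.allclose(out, tgt_mask[:, None].astype(float)))  # True
```

Because the object and background branches read from disjoint sets of source tokens, the object can move (the target mask differs from the source mask) without its appearance leaking into the background, which is the kind of independent control the approach describes.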

Overall Architecture

Detailed Mechanism

Zero-Shot Editing Algorithm

Qualitative Comparison

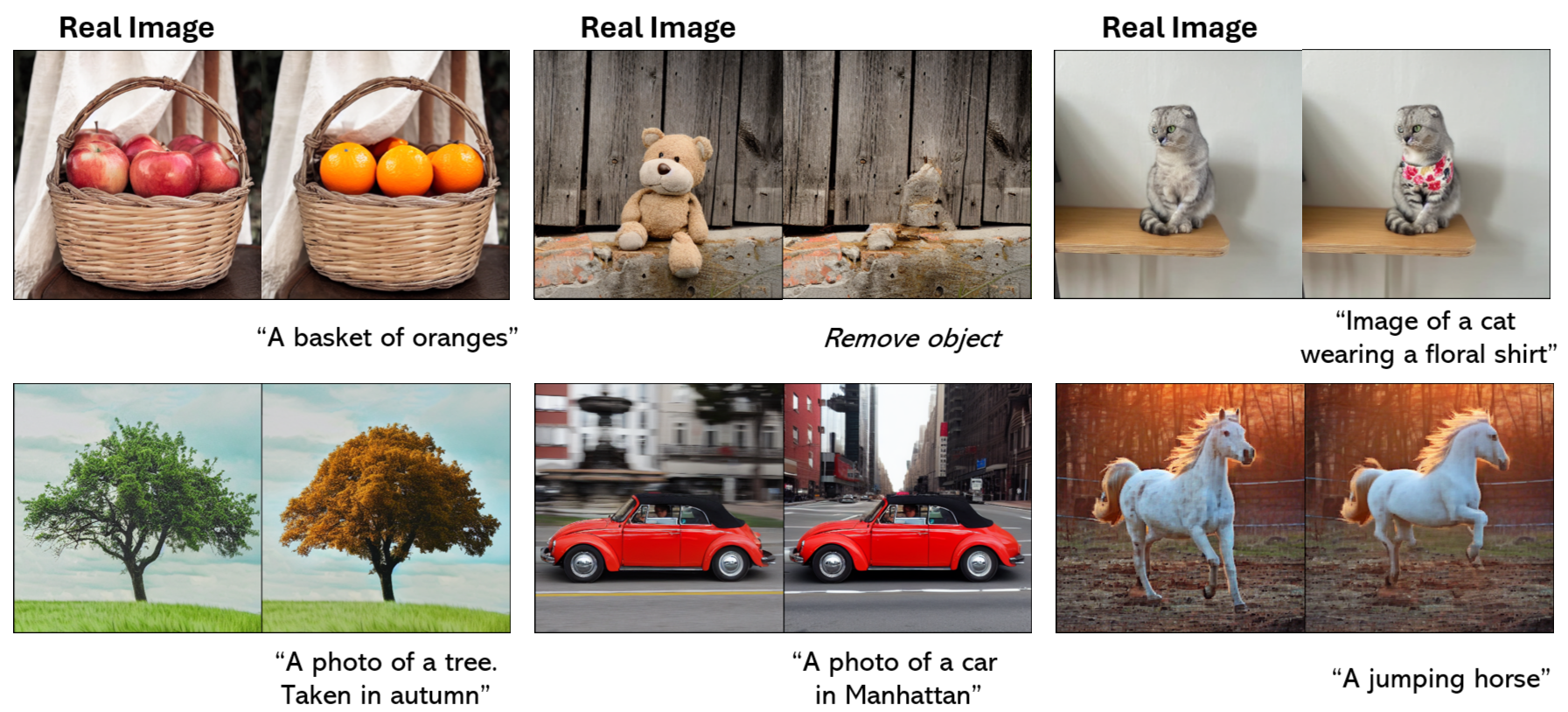

The figure above shows a qualitative comparison of CPAM against leading state-of-the-art image editing techniques. Our results demonstrate that CPAM consistently outperforms existing methods across various real image editing tasks, including object replacement, view and pose changes, object removal, background alteration, and the addition of new objects. CPAM excels at modifying diverse aspects of an image while preserving the original background and avoiding unintended changes to non-target regions.

Quantitative Comparison

We conduct comprehensive quantitative evaluations and user studies to assess the effectiveness of CPAM against state-of-the-art image editing methods. We compare methods along four axes: supported functional capabilities, text-image alignment (CLIPScore), background preservation (LPIPS on the background region), and subjective user ratings across key dimensions. Our evaluation dataset, IMBA (Image Manipulation BenchmArk), comprises 104 carefully curated samples with detailed annotations for diverse editing tasks, including object retention, object modification, and background alteration.
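The two automatic metrics can be sketched as follows. CLIPScore is the clipped cosine similarity between a CLIP image embedding and a CLIP text embedding; this sketch assumes the scaling `100 * max(cos, 0)`, which matches the magnitude of the scores reported here, but the page does not state the exact convention. For background LPIPS, one common protocol is to blank out the edited region in both images before passing them to an LPIPS network; `background_only` below shows only that masking step and is a hypothetical helper, not the benchmark's documented procedure. The embeddings in the test are toy vectors, not real CLIP outputs.

```python
import numpy as np

def clip_score(image_emb, text_emb, w=100.0):
    """CLIPScore-style alignment for one (image, prompt) pair: w * max(cos, 0)."""
    image_emb = np.asarray(image_emb, dtype=float)
    text_emb = np.asarray(text_emb, dtype=float)
    cos = float(image_emb @ text_emb) / (np.linalg.norm(image_emb) * np.linalg.norm(text_emb))
    return w * max(cos, 0.0)

def mean_clip_score(image_embs, text_embs, w=100.0):
    """Average CLIPScore over a benchmark of (edited image, target prompt) pairs."""
    return float(np.mean([clip_score(i, t, w) for i, t in zip(image_embs, text_embs)]))

def background_only(image, edit_mask):
    """Zero out the edited region so a perceptual metric only compares the background.

    image: (H, W, C) array; edit_mask: (H, W) bool, True = edited region.
    """
    return np.where(edit_mask[..., None], 0.0, image)

print(round(clip_score([1.0, 0.0], [1.0, 1.0]), 2))  # 70.71
```

In practice the embeddings would come from a CLIP image and text encoder, and the two masked images would be fed to an LPIPS implementation; higher CLIPScore and lower background LPIPS are better.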

Functional Capabilities Comparison

Functional comparison across editing methods. ✓ indicates supported features, ✗ indicates not supported. Local Edit: region-specific editing. Obj. Removal: object removal capability. Caption-Free: no original image caption required. Mask Ctrl: mask-based region control. Hi-Guidance: compatibility with high classifier-free guidance scales.

| Method | Local Edit | Obj. Removal | Caption-Free | Mask Ctrl | Hi-Guidance |
|---|---|---|---|---|---|
| SDEdit | ✗ | ✗ | ✓ | ✗ | ✗ |
| MasaCtrl | ✓ | ✗ | ✓ | ✓ | ✗ |
| PnP | ✗ | ✗ | ✗ | ✗ | ✗ |
| FPE | ✗ | ✗ | ✗ | ✗ | ✗ |
| DiffEdit | ✓ | ✗ | ✓ | ✓ | ✗ |
| Pix2Pix-Zero | ✗ | ✗ | ✗ | ✗ | ✗ |
| LEDITS++ | ✓ | ✗ | ✓ | ✓ | ✗ |
| Imagic (FT) | ✗ | ✗ | ✗ | ✗ | ✗ |
| CPAM (Ours) | ✓ | ✓ | ✓ | ✓ | ✓ |

CPAM is the only method that supports all five functional capabilities, demonstrating its versatility in handling diverse image editing tasks.

Comparison with State-of-the-Art Methods

Comparison using CLIPScore (measuring text-image alignment) and LPIPS background (evaluating background preservation). Bold indicates best scores, underline indicates second best.

| Method | CLIPScore ↑ | LPIPS (bg) ↓ |
|---|---|---|
| SDEdit | 28.19 | 0.338 |
| MasaCtrl | 28.82 | 0.223 |
| PnP | 29.03 | 0.162 |
| FPE | 29.02 | 0.152 |
| DiffEdit | 28.58 | 0.148 |
| Pix2Pix-Zero | 27.01 | 0.186 |
| LEDITS++ | 28.74 | 0.141 |
| Imagic (FT) | 30.34 | 0.420 |
| CPAM (Ours) | 29.26 | 0.149 |

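Among the methods that require no fine-tuning, CPAM achieves the highest CLIPScore (29.26); only the fine-tuned Imagic scores higher (30.34), at the cost of by far the worst background preservation (LPIPS 0.420). CPAM's background LPIPS (0.149) is close to the best value (0.141, LEDITS++), showing that it combines strong text alignment with low background distortion.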
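Because the two metrics pull in opposite directions, one way to read this table is to ask which methods are Pareto-optimal, that is, not beaten on both CLIPScore and background LPIPS at once by any other method. The short check below hard-codes the numbers from the table; the Pareto framing is an illustrative reading of the results, not an analysis reported in the paper.

```python
# (CLIPScore, background LPIPS) copied from the comparison table.
results = {
    "SDEdit": (28.19, 0.338),
    "MasaCtrl": (28.82, 0.223),
    "PnP": (29.03, 0.162),
    "FPE": (29.02, 0.152),
    "DiffEdit": (28.58, 0.148),
    "Pix2Pix-Zero": (27.01, 0.186),
    "LEDITS++": (28.74, 0.141),
    "Imagic (FT)": (30.34, 0.420),
    "CPAM (Ours)": (29.26, 0.149),
}

def dominates(a, b):
    """a dominates b if it is no worse on both metrics and strictly better on at least one.

    CLIPScore is higher-is-better; LPIPS is lower-is-better.
    """
    (clip_a, lpips_a), (clip_b, lpips_b) = a, b
    no_worse = clip_a >= clip_b and lpips_a <= lpips_b
    strictly_better = clip_a > clip_b or lpips_a < lpips_b
    return no_worse and strictly_better

def pareto_front(scores):
    """Names of methods that no other method dominates, sorted alphabetically."""
    return sorted(
        name for name, s in scores.items()
        if not any(dominates(other, s) for o, other in scores.items() if o != name)
    )

print(pareto_front(results))  # ['CPAM (Ours)', 'Imagic (FT)', 'LEDITS++']
```

Every other method is dominated: DiffEdit by LEDITS++, and SDEdit, MasaCtrl, PnP, FPE, and Pix2Pix-Zero by CPAM.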

User Study Results

Participants rated image editing methods on a scale of 1 (very bad) to 6 (very good). Bold indicates best scores, underline indicates second best.

| Method | Object Retention | Background Retention | Realistic | Satisfaction |
|---|---|---|---|---|
| SDEdit | 3.63 | 3.19 | 3.38 | 2.42 |
| MasaCtrl | 4.01 | 4.17 | 4.32 | 3.11 |
| PnP | 4.61 | 4.49 | 4.20 | 2.63 |
| FPE | 4.50 | 4.44 | 4.33 | 2.53 |
| DiffEdit | 4.58 | 4.57 | 4.40 | 3.13 |
| Pix2Pix-Zero | 2.11 | 4.23 | 1.84 | 1.93 |
| LEDITS++ | 4.38 | 4.95 | 4.57 | 3.26 |
| Imagic (FT) | 3.74 | 3.48 | 4.30 | 4.82 |
| CPAM (Ours) | 4.72 | 5.09 | 4.69 | 3.30 |

CPAM receives the highest user ratings for object retention (4.72), background retention (5.09), and realism (4.69), and the second-highest satisfaction score (3.30), behind only the fine-tuned Imagic (4.82).
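The per-criterion ranking can be read off the user study table mechanically. This snippet hard-codes the mean ratings from the table and prints the best and second-best method for each criterion; it is an illustrative check on the reported numbers, not part of the study protocol.

```python
criteria = ["Object Retention", "Background Retention", "Realistic", "Satisfaction"]

# Mean ratings (1 = very bad, 6 = very good) copied from the user study table.
ratings = {
    "SDEdit": (3.63, 3.19, 3.38, 2.42),
    "MasaCtrl": (4.01, 4.17, 4.32, 3.11),
    "PnP": (4.61, 4.49, 4.20, 2.63),
    "FPE": (4.50, 4.44, 4.33, 2.53),
    "DiffEdit": (4.58, 4.57, 4.40, 3.13),
    "Pix2Pix-Zero": (2.11, 4.23, 1.84, 1.93),
    "LEDITS++": (4.38, 4.95, 4.57, 3.26),
    "Imagic (FT)": (3.74, 3.48, 4.30, 4.82),
    "CPAM (Ours)": (4.72, 5.09, 4.69, 3.30),
}

def top_two(table, column):
    """Return the (best, second-best) method names for one criterion column."""
    ranked = sorted(table, key=lambda method: table[method][column], reverse=True)
    return ranked[0], ranked[1]

for i, criterion in enumerate(criteria):
    best, second = top_two(ratings, i)
    print(f"{criterion}: best={best}, second={second}")
# Object Retention: best=CPAM (Ours), second=PnP
# Background Retention: best=CPAM (Ours), second=LEDITS++
# Realistic: best=CPAM (Ours), second=LEDITS++
# Satisfaction: best=Imagic (FT), second=CPAM (Ours)
```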

Application

CPAM is designed as a general, training-free attention manipulation framework that can be instantiated across diverse image editing scenarios. Below, we present representative systems that build directly on CPAM's core mechanisms, ranging from interactive research prototypes and practical end-user applications to object removal and precise region-focused editing, illustrating its extensibility across problem settings.

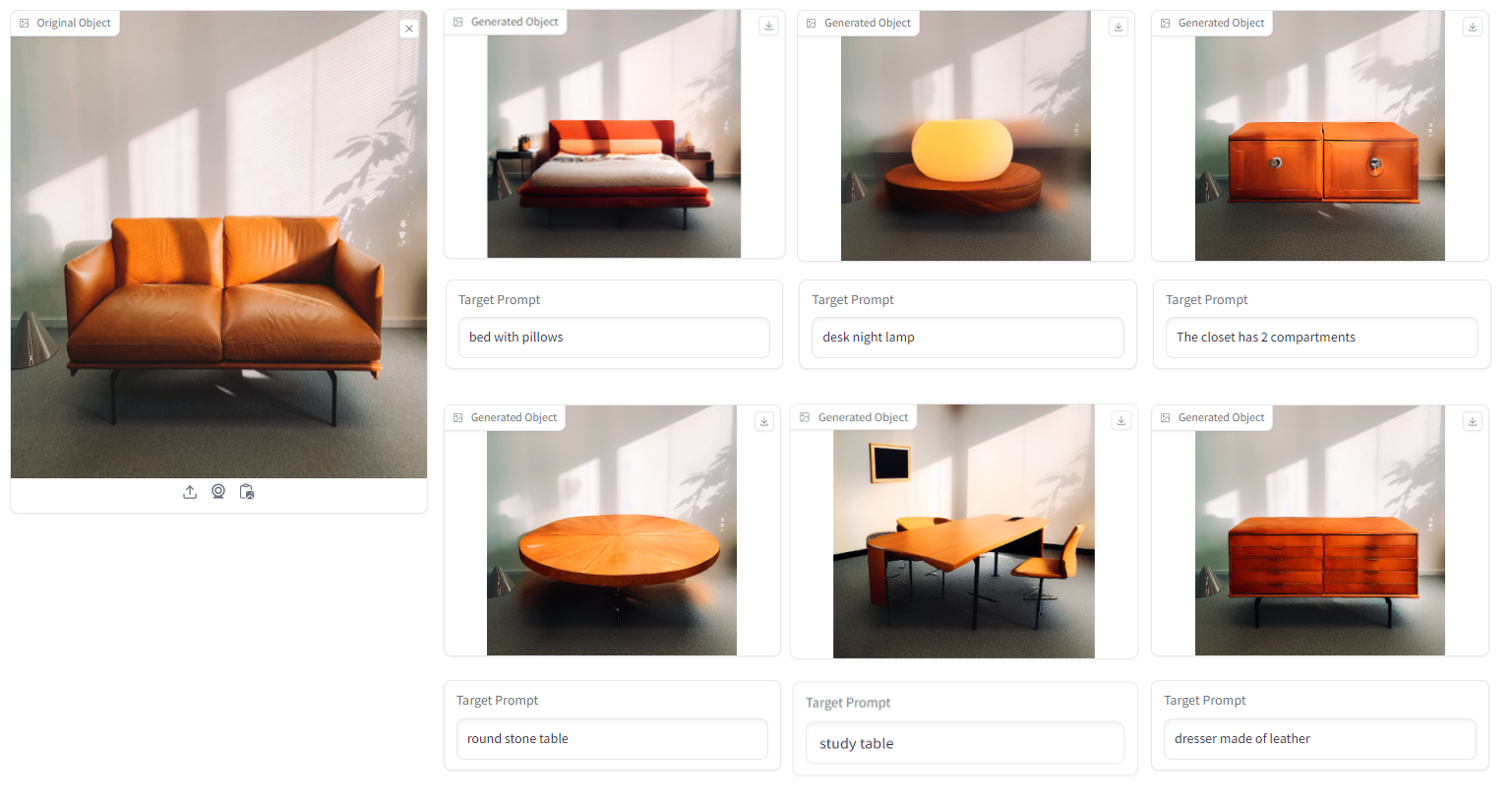

iCONTRA — Interactive Concept Transfer (CHI '24)

iCONTRA demonstrates CPAM's applicability to concept-level consistency in creative workflows. It incorporates a CPAM-based zero-shot editing algorithm that progressively integrates visual information from initial exemplars without fine-tuning, enabling coherent concept transfer across generated items. This formulation allows designers to efficiently create high-quality, thematically consistent collections with reduced effort.

EPEdit — Efficient Photo Editor

EPEdit packages CPAM-based zero-shot editing algorithms into a practical end-user system for comprehensive photo manipulation. By leveraging CPAM’s training-free attention control, EPEdit supports a wide range of editing tasks—including object removal, replacement, pose adjustment, background modification, and thematic collection design—while maintaining efficiency, usability, and low deployment cost.

PANDORA — Zero-Shot Object Removal

PANDORA represents the foundational instantiation of CPAM for prompt-free object removal. By operationalizing CPAM’s pixel-wise attention dissolution and localized attentional guidance, PANDORA enables precise, non-rigid, and scalable multi-object erasure in a single pass without fine-tuning or prompt engineering.

FocusDiff — Target-Aware Refocusing

Building upon the same CPAM principles, FocusDiff extends attention refocusing and preservation mechanisms to region-specific text-guided editing, addressing prompt brittleness, spillover artifacts, and failures on small or cluttered objects. CPAM’s localized preservation strategies naturally generalize to FocusDiff’s refocused cross-attention, further enabling globally consistent editing in challenging settings such as 360° indoor panoramas and virtual reality environments.

CPAM (2025)

@article{vo2025cpam,
  title={CPAM: Context-Preserving Adaptive Manipulation for Zero-Shot Real Image Editing},
  author={Vo, Dinh-Khoi and Do, Thanh-Toan and Nguyen, Tam V and Tran, Minh-Triet and Le, Trung-Nghia},
  journal={arXiv preprint arXiv:2506.18438},
  year={2025}
}

EPEdit: Redefining Image Editing with Generative AI (2024)

@inproceedings{nguyen2024epedit,
  title={EPEdit: Redefining Image Editing with Generative AI and User-Centric Design},
  author={Nguyen, Hoang-Phuc and Vo, Dinh-Khoi and Do, Trong-Le and Nguyen, Hai-Dang and Nguyen, Tan-Cong and Nguyen, Vinh-Tiep and Nguyen, Tam V and Le, Khanh-Duy and Tran, Minh-Triet and Le, Trung-Nghia},
  booktitle={International Symposium on Information and Communication Technology},
  pages={272--283},
  year={2024},
  organization={Springer}
}

iCONTRA: Interactive Concept Transfer (CHI 2024)

@inproceedings{10.1145/3613905.3650788,
  author = {Vo, Dinh-Khoi and Ly, Duy-Nam and Le, Khanh-Duy and Nguyen, Tam V. and Tran, Minh-Triet and Le, Trung-Nghia},
  title = {iCONTRA: Toward Thematic Collection Design Via Interactive Concept Transfer},
  year = {2024},
  isbn = {9798400703317},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3613905.3650788},
  doi = {10.1145/3613905.3650788},
  abstract = {Creating thematic collections in industries demands innovative designs and cohesive concepts. Designers may face challenges in maintaining thematic consistency when drawing inspiration from existing objects, landscapes, or artifacts. While AI-powered graphic design tools offer help, they often fail to generate cohesive sets based on specific thematic concepts. In response, we introduce iCONTRA, an interactive CONcept TRAnsfer system. With a user-friendly interface, iCONTRA enables both experienced designers and novices to effortlessly explore creative design concepts and efficiently generate thematic collections. We also propose a zero-shot image editing algorithm, eliminating the need for fine-tuning models, which gradually integrates information from initial objects, ensuring consistency in the generation process without influencing the background. A pilot study suggests iCONTRA's potential to reduce designers' efforts. Experimental results demonstrate its effectiveness in producing consistent and high-quality object concept transfers. iCONTRA stands as a promising tool for innovation and creative exploration in thematic collection design. The source code will be available at: https://github.com/vdkhoi20/iCONTRA.},
  booktitle = {Extended Abstracts of the CHI Conference on Human Factors in Computing Systems},
  articleno = {382},
  numpages = {8},
  keywords = {Diffusion model, Thematic collection design, Zero-shot image editing},
  location = {Honolulu, HI, USA},
  series = {CHI EA '24}
}

Acknowledgment

Funding and GPU Support

This research is funded by the Vietnam National Foundation for Science and Technology Development (NAFOSTED) under Grant Number 102.05-2023.31. This research used the GPUs provided by the Intelligent Systems Lab at the Faculty of Information Technology, University of Science, VNU-HCM.

User Study Participants

We extend our heartfelt gratitude to all 20 participants who took part in our comprehensive user study. Your valuable time, thoughtful feedback, and detailed evaluations across 50 randomly shuffled images were instrumental in validating the effectiveness and usability of our CPAM framework. Your insights helped us understand the practical impact of our zero-shot real image editing approach and provided crucial evidence of its superiority over existing state-of-the-art methods.

Website Design Inspiration

This website design is inspired by ObjectDrop. We thank the authors for their excellent work and creative design approach.